Authors: Meghann Rhynard-Geil & Lisa Inks

Type of publication: Report

Date of publication: January 22, 2020

Introduction

The so-called Digital Revolution has transformed the humanitarian, development and peacebuilding landscape, creating new pathways for data-driven interventions, along with a broader ecosystem of relevant actors, roles and relationships. Social media platforms, search engines and online news organizations, for example, play an increasingly significant role in election integrity, civic discourse and group identity formation, with offline impacts on peace and social cohesion.

But while digital technologies can offer many opportunities to improve people’s lives, there is also growing concern around their possible negative implications as drivers of violence, persecution and exploitation. While the spread of malicious or inaccurate information has long been a driver of conflict through in-person communication and traditional media, this landscape assessment examines the ways in which digital platforms and behaviors — specifically on social media — uniquely contribute to conflict and may require peacebuilders to adapt their existing strategies or create new approaches.

Case Study 1: Information operations — Russia’s targeting of the White Helmets in Syria

In the digital era, coordinated disinformation operations have re-emerged as a central component of Russia’s information warfare strategy in places like Syria, a country of significant geostrategic importance for Russia that has been plagued by one of the worst refugee crises in modern history. Specifically, the Russian government has made systematic use of information operations to amplify manufactured claims and false accusations against the Syrian Civil Defense, also known as the White Helmets, a Nobel Prize-nominated humanitarian organization made up of more than 3,000 volunteers who are credited with saving thousands of lives in Syria. In the context of armed conflict in Syria, Russia’s government has labeled the White Helmets a terrorist organization with links to al-Qaeda and ISIS.

Weaponization via information operations

Information operations, defined as “the integrated employment … of information-related capabilities in concert with other lines of operations to influence, disrupt, corrupt, or usurp the decision-making of adversaries,” are a central component of Russia’s information warfare strategy. In such situations, conflict is not declared overtly, and most activities are carried out below the threshold of conventional means.

Technique and tactics

While descriptions of the specific steps taken to implement information operations vary somewhat from source to source, a central sequence of practices recurs across them and makes up the “Digital Disinformation Playbook”:

- Targeting: Propagators of disinformation operations carry out intelligence collection on their target audiences via open-source channels on the web and analysis gathered by digital advertising agencies.

- Content creation: Operatives create and curate emotionally resonant or otherwise inciteful content (audio/visual, text-based information) for weaponization, including propaganda, misinformation and disinformation.

- Dissemination: Narratives are systematically disseminated through multiple means, fusing together social and traditional media, as well as offline channels such as printed materials or public rallies.

- Amplification: Propagated narratives are then amplified via botnets, inauthentic accounts, influencers, hashtag hijacking, astroturfing (imitating grass-roots actions using coordinated inauthentic accounts) and trading up the chain (planting stories in small outlets where they can then be picked up by larger ones).

- Distraction: All actors within the system work together to prevent objective sense-making within the target zone of operations by creating distractions, disrupting telecommunications infrastructure or banning social media platforms.

Impacts and implications

The Syria Campaign, with research from Graphika, estimates that “bots and trolls linked to other Russian disinformation campaigns have reached an estimated 56 million people on Twitter with posts related to the White Helmets during ten key news moments of 2016 and 2017.” These online defamation campaigns seek to delegitimize the White Helmets’ status as a neutral and impartial humanitarian actor in order to cast them as a legitimate target for kinetic attacks. More than 210 White Helmets volunteers have been killed since 2013. Their centers “have been hit by missiles, barrel bombs and artillery bombardment 238 times between June 2016 and December 2017.”

Case Study 2: Political Manipulation — Elections in the Philippines

President Rodrigo Duterte of the Philippines has proven adept at exploiting social media for political gain, using it to reinforce positive narratives about his campaign and to defame and silence opponents and critics. The Philippines-based online news website Rappler has been the target of coordinated and sustained disinformation campaigns since it exposed the systematic use of paid trolls, bots, networks of fake accounts and contracted influencers to propagate pro-Duterte narratives (including mis- and disinformation) during the 2016 presidential election.

Weaponization via political manipulation

Political manipulation is similar to information operations, but within the context of a single community or state. Political discourse is systematically manipulated by networked disinformation campaigns modeled after digital advertising approaches and operationalized through exploitative strategies and incentive structures. These practices have the power to set agendas, propagate ideas, debase political discourse and silence dissent, ultimately seeking to change the outcome of political events.

These disinformation campaigns play out in three phases:

- Design: Establishing objectives, branding, core narratives, etc.

- Mobilization: Onboarding influencers, fake account operators and grassroots intermediaries, and preparing media channels.

- Implementation: Disseminating and amplifying messages and implementing other tactics such as digital black ops, #trending and signal scrambling.

Conflict Analysis

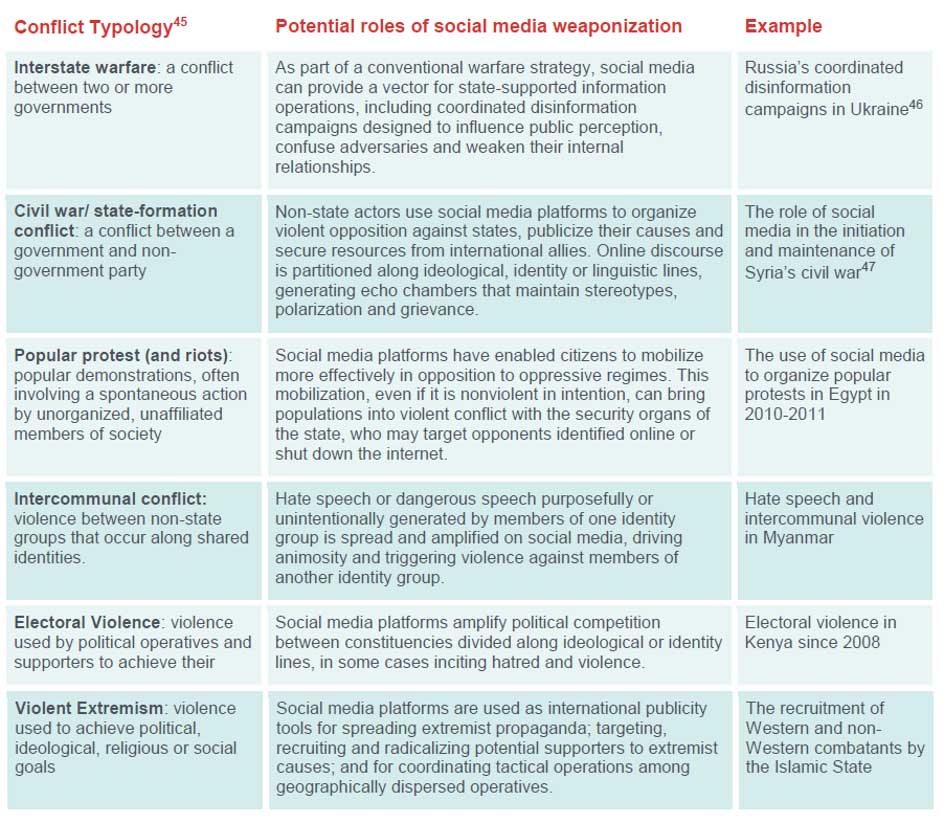

The weaponization of social media drives conflict in powerful ways that intersect with and exacerbate existing issues in specific contexts. For individuals, social media’s amplification power and highly targeted, personalized nature can exploit fundamental cognitive processes to implant dangerous information and influence susceptible people with greater efficiency than other means of communication. On a broader scale, these same qualities can polarize groups of people and lend credibility to rumors, further dividing and inciting violence between groups at risk of conflict. These drivers are evolving quickly — faster than traditional approaches to addressing information-borne threats.

Existing conflict drivers

Weaponization phenomena intersect with a diverse range of societal predispositions to conflict, increasing tensions and the risk of violence. Root causes of conflict (also called structural causes or underlying causes) are context-specific, long-term or systemic causes of violent conflict that have become built into the norms, structures and policies of a society.

Different types of root causes likely present varying degrees of susceptibility to malicious or inaccurate information on social media, but further research would be useful on whether some causes (e.g., identity-based and attitudinal/normative ones) are particularly susceptible to exacerbation by weaponized social media. Constructivist perspectives, particularly concerning the social construction of identity and the roles of language, norms, knowledge and symbols in the initiation and maintenance of conflict, might be useful in analyzing the influence of weaponized social media in more depth.

A new response framework: Phases and entry points for programming

Digital weaponization phenomena challenge the way organizations develop responses to problems in international humanitarian, development and peacebuilding domains. In particular, these phenomena add a layer of complexity to traditional conflict drivers and the ways in which peacebuilding and violence prevention efforts seek to address those drivers. The actors and pathways of weaponized social media are cross-sectoral, trans-national and evolving at rates that outpace the international system’s current response. Effective alternatives require organizations willing to navigate new terrain.

Prevention

Prevention activities are intended to reduce the incidence of weaponization. These include rules developed and enforced by governments, multinational bodies or industry associations, such as legislation or regulations concerning transparency, user data protection and accountability mechanisms, as well as punitive efforts that might deter future harms. For example, the European Union has developed a set of data protection rules that regulates how businesses and organizations collect, process and store individuals’ data and that establishes rights for citizens and means for redress.

Prevention also includes the policies and technical initiatives of tech companies that impact the prevalence of weaponization on social media platforms, such as the development of company community standards, product updates that promote feedback mechanisms or the improvement of practices to remove inauthentic accounts. Civil society-led advocacy activities to influence either regulations or technology company practices also contribute to prevention. Many of these activities and the relationships required to promote prevention are relatively novel for international humanitarian, development and peacebuilding organizations.

Monitoring, detection, and assessment of threats

A wide variety of stakeholders, from intelligence organizations to civil society activists, play a role in threat monitoring, detection and assessment. However, these activities have not traditionally been a central concern of most international humanitarian, development and peacebuilding organizations. Activities under this category include information and threat mapping, the development of open-source rumor monitoring and management systems, identification and analysis of online hate speech, and social network monitoring, analysis and reporting.

One example of a misinformation monitoring and management system is the Sentinel Project’s Una Hakika program in Kenya’s Tana Delta. The program was designed to counter rumors that contributed to inter-ethnic conflict by creating a platform for community members to report and verify misinformation and to develop strategies to address it.

Building resilience to threats

Building resilience, or increasing the ability of societies to resist weaponized social media’s worst impacts, includes both online and offline responses and aligns with traditional aspects of peacebuilding and conflict-sensitive development or humanitarian action. Strategies for building resilience to the impacts of social media include training in digital media literacy, general awareness-raising campaigns on the ways in which social media can be weaponized and general social cohesion building. The Digital Storytelling initiative in Sri Lanka is an example of a combined approach that seeks to build skills in citizen storytelling to balance some of the polarized online rhetoric, while also increasing digital literacy within communities so that their members become more responsible consumers of online information.

Peacebuilding organizations have developed a range of tools and strategies to build social cohesion within and between communities in or at risk of conflict, as well as between communities and government institutions, increasing trust and improving relationships, and strengthening the social contract. For example, Mercy Corps’ peacebuilding work in Nigeria’s Middle Belt has increased trust and perceptions of security across farmer and pastoralist groups while including specific initiatives to support religious and traditional leaders in analyzing and leading discussions aimed at reducing the impacts of hate speech in social media.

Conclusion

The disruptive nature of weaponization has significant implications for how organizations respond. On a macro level, the novelty and influence of these phenomena expose new players, as well as various inabilities and mismatches in the network of rules and organizations that we would traditionally rely upon to respond to these risks. In this space, tech companies, for example, have emerged as highly influential entities, but they have not been regulated (or do not self-regulate) commensurate with the scale of harm that their platforms can generate. Governments and media institutions find themselves struggling to keep pace with the speed at which these technologies evolve. Civil society organizations working with affected populations must consider new partnerships, employees, programs and funding sources to respond effectively.

The Wathinotes are either original abstracts of publications selected by WATHI, modified versions of the original abstracts, or excerpts chosen by WATHI for their relevance to the theme of the Debate. When publications and abstracts are available only in French or only in English, WATHI translates the selected excerpts into the other language. All Wathinotes link to the original, full publications, which are not hosted on the WATHI website, and are intended to promote the reading of these documents, which are the product of research by academics and experts.